Scoring: for fun and extra credit!

by Paul Denny

The University of Auckland

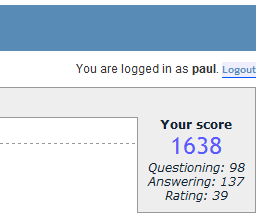

PeerWise includes several "game-like" elements (such as badges, points and leaderboards) which are designed primarily for fun and to inject a bit of friendly competition between students. As an example, students accumulate points as they make their contributions and their reputation score is displayed near the top right corner of the main menu.

In fact, if you have participated in your own course, perhaps you have noticed your score increasing over time?

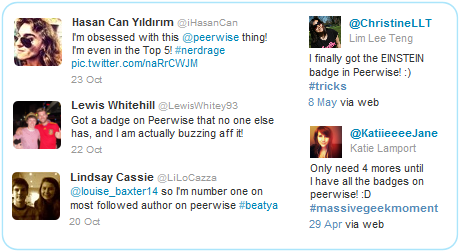

Of course, not all students are motivated by such things, but a quick search of recent Twitter posts reveals that some students really seem to enjoy earning the various virtual rewards that are on offer:

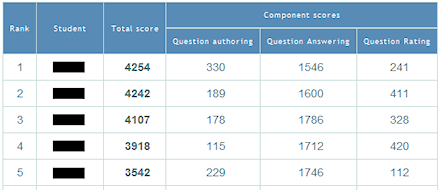

Some instructors have even considered using these elements to award "bonus marks" or "extra credit" as a way of motivating their students. Obtaining the data to verify that students have met certain goals is straightforward: for example, instructors can view the scores of all of their students in real time by selecting "View scores of all students" from the Administration menu.

The reputation score algorithm

The current scoring algorithm has two primary goals: firstly, to encourage students to contribute early (rather than leaving their contributions to immediately before a deadline) and secondly, to encourage students to make "good" contributions.

The overall score is broken into three components: "Question authoring", "Answering questions" and "Rating questions". One unusual feature of the scoring algorithm (and probably the one that prompts the most queries) is that a student's score does not increase as a direct result of their own actions; it increases only when other students are subsequently active.

First, let's consider the "Rating questions" component. Rather than receiving 1 point every time they rate a question, a student receives 1 "rating point" only when another student comes along after them and gives the same question the same rating.

This was chosen for the following reasons:

- it encourages students to participate early – the earlier you rate a question, the more students can potentially rate that question after you and hence the more points you can earn.

- it encourages students to rate fairly – you will generally collect more points if your rating decisions agree with the decisions of the students that come after you.
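The rating rule described above can be sketched in a few lines of Python. This is only an illustration, not PeerWise's actual implementation: the function name `rating_points`, the tuple layout, and the assumption that each student rates a given question at most once are all hypothetical.

```python
from collections import defaultdict

def rating_points(ratings):
    """ratings: list of (student, question, rating) tuples in chronological order.

    Returns a dict mapping each student to their "Rating questions" points.
    Assumption (not from the article): each student rates a question at most once.
    """
    points = defaultdict(int)
    # For each (question, rating) pair, the students who have already given it.
    earlier = defaultdict(list)
    for student, question, rating in ratings:
        # Every earlier rater who gave this question the same rating earns 1 point.
        for previous in earlier[(question, rating)]:
            points[previous] += 1
        earlier[(question, rating)].append(student)
    return dict(points)

# Hypothetical data: four students rate the same question in this order.
ratings = [
    ("alice", "q1", 4),
    ("bob", "q1", 4),
    ("carol", "q1", 2),
    ("dave", "q1", 4),
]
print(rating_points(ratings))  # {'alice': 2, 'bob': 1}
```

Alice rated first and was matched twice, Bob was matched once, and Carol (whose rating nobody repeated) and Dave (who rated last) earn nothing yet, which is exactly the "rate early, rate fairly" incentive.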

Likewise, the "Answering questions" component increases by 1 every time someone correctly answers a question by selecting the same answer that you have previously selected. Once again, earning a large score requires students to answer questions early and correctly.
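The answering component follows the same "agreement after the fact" idea, with the extra condition that only correct answers count. A minimal sketch, assuming a hypothetical `correct` lookup that gives the correct choice for each question (how PeerWise actually determines correctness is not described here):

```python
from collections import defaultdict

def answering_points(answers, correct):
    """answers: chronological list of (student, question, choice) tuples.
    correct: dict mapping question -> correct choice (hypothetical input).

    Returns a dict mapping each student to their "Answering questions" points.
    """
    points = defaultdict(int)
    # For each question, the students who have already answered it correctly.
    earlier_correct = defaultdict(list)
    for student, question, choice in answers:
        if choice != correct[question]:
            continue  # an incorrect answer neither earns nor awards points
        for previous in earlier_correct[question]:
            points[previous] += 1
        earlier_correct[question].append(student)
    return dict(points)

# Hypothetical data: the correct answer to q1 is "B".
answers = [
    ("alice", "q1", "B"),
    ("bob", "q1", "C"),
    ("carol", "q1", "B"),
    ("dave", "q1", "B"),
]
print(answering_points(answers, {"q1": "B"}))  # {'alice': 2, 'carol': 1}
```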

The "Question authoring" score increases by 1, 2 or 3 every time one of the student's questions is rated "good", "very good" or "excellent" respectively.
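As a small worked example, the authoring component can be computed by mapping each rating label to its point value. The label "fair" below is a hypothetical stand-in for any rating lower than "good", and treating such ratings as worth 0 points is an assumption.

```python
# Point values stated in the text; anything else is assumed to earn nothing.
RATING_POINTS = {"good": 1, "very good": 2, "excellent": 3}

def authoring_score(ratings_received):
    """Sum the points for every rating a student's questions have received."""
    return sum(RATING_POINTS.get(label, 0) for label in ratings_received)

# Hypothetical ratings received across all of one student's questions.
print(authoring_score(["good", "excellent", "fair", "very good"]))  # 6
```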

The total reputation score (which appears on the Main Menu as shown in the image at the top of this page) is a combination of the component scores. The total score will be much higher for a student who receives good scores in all three components than for a student who has an excellent score in just one component – in other words, it favours students participating in all areas. The actual calculation is the product of the logarithms of the component scores.
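To see why a product of logarithms favours balanced participation, here is a rough sketch. The article does not give the logarithm base, any scaling factor, or how a component score of zero is handled, so the use of the natural logarithm of (1 + score) with no scaling is purely an assumption, and the numbers it produces will not match real PeerWise scores.

```python
import math

def total_score(authoring, answering, rating):
    """Product of the logarithms of the three component scores.

    Assumption: natural log of (1 + score), so a zero component gives a
    factor of 0 rather than an undefined log(0). The real formula may differ.
    """
    return math.log1p(authoring) * math.log1p(answering) * math.log1p(rating)

# Two hypothetical students with the same 300 component points in total.
balanced = total_score(100, 100, 100)
lopsided = total_score(298, 1, 1)
print(round(balanced, 1), round(lopsided, 1))
```

Under these assumptions the balanced student ends up with a total score dozens of times higher than the lopsided one, and a student with any component at zero gets a total of zero in this sketch.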

Given the way the component scores are calculated, the total scores that are achievable depend heavily on the total number of students in the class.

Typical scores

One difficulty with using the score to award extra credit is that coming up with realistic target scores is complicated by the way the scoring algorithm works. Essentially, the algorithm rewards students for making contributions that are valued by their peers. In order to achieve the highest possible score, a student must make regular contributions and:

- author questions that their peers rate highly

- answer questions correctly before their peers

- rate questions as they are subsequently rated by their peers

This means that the total number of points a student can earn depends on how often their classmates endorse their contributions, and this in turn depends not only on the size of the class but also on the "requirements" placed on the activity by the course instructor. This makes it tricky to set reasonable score targets for students to reach. Recently, a PeerWise member, Brad Wyble, raised exactly this point:

I'm interesting in linking the peerwise score to extra credit points but I'm a little stuck on how to proceed without an idea of what the range of possible values might be. I don't need to know an exact number, but is it possible to provide a rough estimate given a course of size 60? And how would this estimate change for a size of 150?

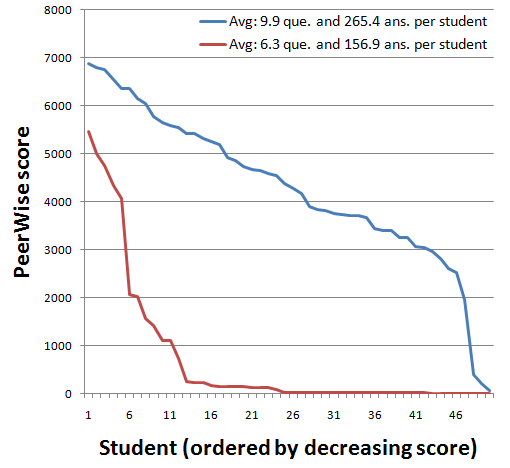

So, what is the typical range of PeerWise scores for a class of a given size? Let's start with a class of 50 students. It turns out the range can be quite wide, as exemplified by the two extreme cases in the figure below (each line represents the set of scores for a single class of 50 students; the average numbers of questions authored and answers submitted by students in each class are shown in the legend):

Not only were students in the "blue" class all highly active, but almost all of them made contributions in each of the three areas required for maximising the score: question authoring, answering questions, and rating questions. On the other hand, students in the "red" class were all quite active in answering questions, but only a few students in this class were active in all three areas. In fact, only the first 12 students had non-zero scores for each of the three components. The remaining students scored 0 for the question authoring component (most likely because they chose not to author any questions). Students 13-24 in the figure had component scores only for answering questions and rating questions, whereas the remaining students (from student 25 onwards) had only a single component score (for answering questions). These students chose not to rate any of the questions they answered, and ended up with very low total scores (even though in some cases they may have answered many questions).

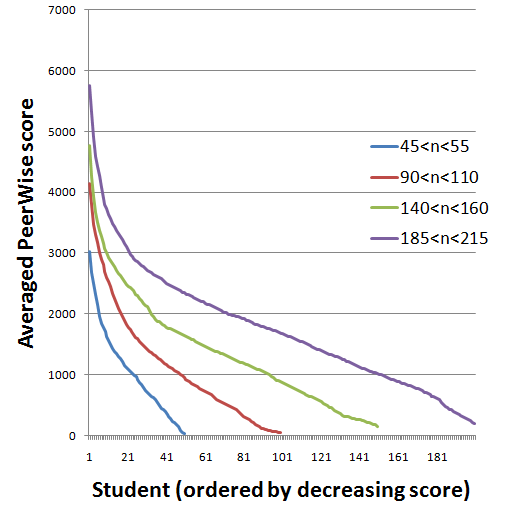

To calculate a "typical" range of scores for classes of varying sizes, we can average the class scores over a number of classes. For example, to calculate the typical range for classes of approximately 200 students, a set of 20 classes was selected (with class sizes ranging from 185 to 215) and the student scores for each class were listed in descending order. To calculate the average "top score", the top scores of the 20 classes were averaged. The same was done for the second-highest score, and so on in decreasing order for all remaining scores. The figure below plots the average set of scores for classes of varying sizes (approximately 50, 100, 150 and 200), obtained by averaging the class scores across a series of sample courses (in each calculation, between 15 and 20 classes were examined).
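The rank-by-rank averaging described above can be sketched as follows. The function name and the sample data are hypothetical, and because the article does not say how classes of slightly different sizes were aligned, truncating every class to the size of the smallest one is an assumption.

```python
def average_ranked_scores(classes):
    """classes: list of lists of student scores, one inner list per class.

    Returns the average top score, average second-highest score, and so on.
    """
    # List each class's scores in descending order.
    ranked = [sorted(scores, reverse=True) for scores in classes]
    # Assumption: truncate to the smallest class so that every rank
    # has exactly one score from every class.
    n = min(len(r) for r in ranked)
    return [sum(r[i] for r in ranked) / len(ranked) for i in range(n)]

# Hypothetical scores for three small classes.
classes = [[900, 300, 50], [700, 500, 10, 0], [800, 100, 30]]
print(average_ranked_scores(classes))  # [800.0, 300.0, 30.0]
```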

Brad also makes the following point in his forum post:

I suppose that another option would be to compute the grading scheme at the end of the semester once we see what the distribution of point values are.

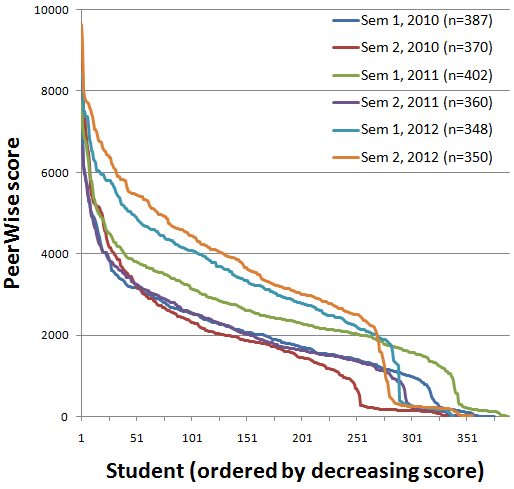

This is an excellent idea. In many cases, the range of scores for a given course appears to be fairly consistent from one semester to the next (assuming the class size and participation requirements do not vary greatly). The figure below plots the set of PeerWise scores for one particular course over 6 different semesters. The class size was fairly consistent (around 350-400 students) and although the scores do vary, there is probably enough consistency to give instructors in future semesters some idea of what to expect (which may help them define targets for awarding bonus marks or extra credit).

In this class, only the very top few students achieve scores above 6000. It is interesting to note that the very sharp drops in the curves towards the right-hand edge of the chart correspond to students who have not made contributions in each of the three areas. Earning points in each of the question authoring, answering questions, and rating questions components is critical to achieving a good score, and it is probably important for instructors to emphasise this to students (although this information is shown when students hover their mouse over the score on the main menu).

Has anyone tried using the points (or the badges) as a way of rewarding students with extra credit or bonus marks? It would be interesting to hear of your experience - please share!